More on Entrepreneurship/Creators

cdixon

3 years ago

2000s Toys, Secrets, and Cycles

During the dot-com bust, I started my internet career. People used the internet intermittently to check email, plan travel, and do research. The average internet user spent 30 minutes online a day, compared to 7 hours today. To use the internet, you had to "log on" (most people still used dial-up), unlike today's always-on, high-speed mobile internet. In 2001, Amazon's market cap was $2.2B, 1/500th of what it is today. A study asked Americans if they'd adopt broadband, and most said no. They didn't see a need to speed up email, the most popular internet use. The National Academy of Sciences ranked the internet 13th among the 100 greatest inventions, below radio and phones. The internet was a cool invention, but it had limited uses and wasn't a good place to build a business.

A small but growing movement of developers and founders believed the internet could be more than a read-only medium, allowing anyone to create and publish. This is web 2. The runner up name was read-write web. (These terms were used in prominent publications and conferences.)

Web 2 concepts included letting users publish whatever they want ("user generated content" was a buzzword), social graphs, APIs and mashups (what we call composability today), and tagging over hierarchical navigation. Technical innovations occurred. A seemingly simple but important one was dynamically updating web pages without reloading. This is now how people expect web apps to work. Mobile devices that could access the web were niche (I was an avid Sidekick user).

The contrast between what smart founders and engineers discussed over dinner and on weekends and what the mainstream tech world took seriously during the week was striking. Enterprise security appliances, essentially preloaded servers with security software, were a popular trend. Many of the same people would talk about "serious" products at work, then talk about consumer internet products and web 2. It was tech's biggest news. Web 2 products were seen as toys, not real businesses. They were hobbies, not work-related.

There's a strong correlation between rich product design spaces and what smart people find interesting, which took me some time to learn and led to blog posts like "The next big thing will start out looking like a toy." Web 2's novel product design possibilities sparked dinner and weekend conversations. Imagine combining these features. What if you used this pattern elsewhere? What new product ideas are next? This excited people. "Serious stuff" like security appliances seemed more limited.

The small and passionate web 2 community also stood out. I attended the first New York Tech meetup in 2004. Everyone fit in Meetup's small conference room. Late at night, people demoed their software and chatted. Sometimes I get asked how I first met old friends like Fred Wilson and Alexis Ohanian. These topics didn't interest many people, especially on the east coast, so those of us who cared became friends. It was a real community. Alex Rampell, who now works with me at a16z, is someone I met in 2003 when a friend said, "Hey, I met someone else interested in consumer internet." That was rare. People were focused and enthusiastic. Revolution seemed imminent. We knew a secret nobody else did.

My web 2 startup was called SiteAdvisor. When my co-founders and I started developing the idea in 2003, web security was out of control. Phishing and spyware were common on Internet Explorer PCs. SiteAdvisor was designed to warn users about security threats like phishing and spyware, and then, using web 2 concepts like user-generated reviews, add more subjective judgments (similar to what TrustPilot seems to do today). This staged approach was common at the time; I called it "Come for the tool, stay for the network." We built APIs, encouraged mashups, and did SEO marketing.

Yahoo's 2005 acquisitions of Flickr and Delicious boosted web 2 in 2005. By today's standards, the amounts were small, around $30M each, but it was a signal. Web 2 was assumed to be a fun hobby, a way to build cool stuff, but not a business. Yahoo was a savvy company that said it would make web 2 a priority.

As I recall, that's when web 2 started becoming mainstream tech. Early web 2 founders transitioned successfully. Other entrepreneurs built on the early enthusiasts' work. Competition shifted from ideation to execution. You had to decide if you wanted to be an idealistic indie bar band or a pragmatic stadium band.

Web 2 was booming in 2007: Facebook passed 10M users, Twitter grew and got VC funding, and Google bought YouTube. The 2008 financial crisis tested entrepreneurs' resolve. Smart people predicted another great depression as tech funding dried up.

Many people struggled during the recession. Yet 2008-2011 was a golden age for startups. By 2009, talented founders were flooding Apple's iPhone app store. Mobile apps were booming. Uber, Venmo, Snap, and Instagram were all founded between 2009 and 2011. Social media (which had replaced web 2), cloud computing (which enabled apps to scale server side), and smartphones converged. Even if social, cloud, and mobile improve linearly, the combination could improve exponentially.

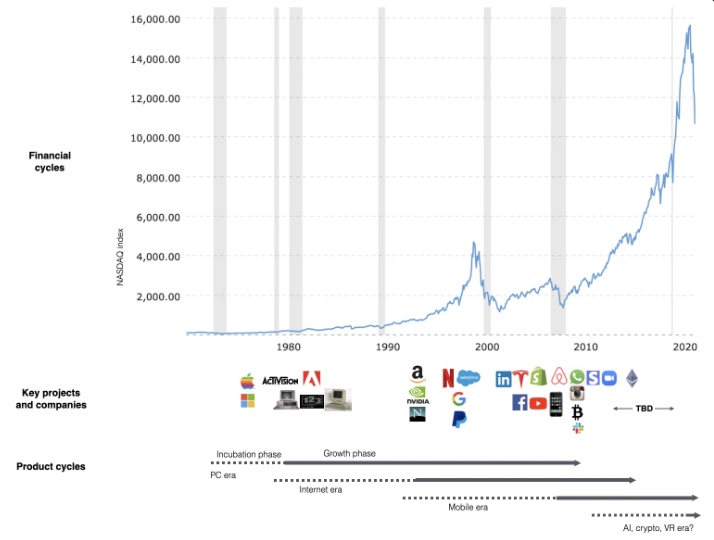

This chart shows how I view product and financial cycles. Product and financial cycles evolve separately. The Nasdaq index is a proxy for the financial sentiment. Financial sentiment wildly fluctuates.

Next row shows iconic startup or product years. Bottom-row product cycles dictate timing. Product cycles are more predictable than financial cycles because they follow internal logic. In the incubation phase, enthusiasts build products for other enthusiasts on nights and weekends. When the right mix of technology, talent, and community knowledge arrives, products go mainstream. (I show the biggest tech cycles in the chart, but smaller ones happen, like web 2 in the 2000s and fintech and SaaS in the 2010s.)

Tech has changed since the 2000s. A few tech giants dominate the internet, exerting economic and cultural influence. In the 2000s, web 2 was ignored or dismissed as trivial. Today, entrenched interests respond aggressively to new movements that could threaten them. Creative patterns from the 2000s continue today, driven by enthusiasts who see possibilities where others don't. Know where to look. Crypto and web 3 are where I'd start.

Today's negative financial sentiment reminds me of 2008. If we face a prolonged downturn, we can learn from 2008 by preserving capital and focusing on the long term. Keep an eye on the product cycle. Smart people are interested in things with product potential. This becomes true. Toys become necessities. Hobbies become mainstream. Optimists build the future, not cynics.

Desiree Peralta

3 years ago

Why Now Is Your Chance To Create A Millionaire Career

People don’t believe in influencers anymore; they need people like you.

Social media influencers have dominated for years. We've seen videos, images, and articles of *famous* individuals unwrapping, reviewing, and endorsing things.

This industry generates billions. This year, marketers spent $2.23 billion on Instagram, $1 million on YouTube, and $775 million on TikTok. This marketing has helped start certain companies.

Influencers are dying, so ordinary people like us may take over this billion-dollar sector. Why?

Why influencers are perishing

Most influencers lie to their fans, especially on Instagram. Influencers' first purpose was to make their lives so flawless that others would want to buy their stuff.

In 2015, an Australian influencer with 600,000 followers went viral for revealing all her photos and everything she did to seem great before deleting her account.

“I dramatically edited the pictures, I manipulated the environments, and made my life look perfect in social media… I remember I obsessively checked the like count for a full week since uploading it, a selfie that now has close to 2,500 likes. It got 5 likes. This was when I was so hungry for social media validation … This was the reason why I quit social media: for me, personally, it consumed me. I wasn’t living in a 3D world.”

Influencers then lost credibility.

Influencers seem to live in a bubble, separate from us. Thanks to the popularity of self-love and constant awareness campaigns, people find these influencers ridiculous.

Influencers are praised more for showing themselves as natural and common than for showing luxuries and lies.

Little by little, they are dying, making room for a new group to take advantage of this multi-million dollar business, which gives us (ordinary people) a big opportunity to grow on any content creation platform we want.

Why this is your chance to develop on any platform for creating content

In 2021, I wrote “Not everyone who talks about money is a Financial Advisor, be careful of who you take advice from.” In it, I warned that not everyone with a large following is a reputable source of financial advice.

Other writers hated this post and said I was wrong.

People don't want Jeff Bezos or Elon Musk's counsel, they said. They prefer to hear about their neighbor's restroom problems or his closest friend's terrible business.

Real advice from regular folks.

I found this was true when I returned to my independent YouTube channel. I had abandoned it in 2021 with fewer than 30 videos, since there were already many personal finance and travel channels and I thought mine wasn't special. When I came back, it had more than 1,000 followers.

People appreciated my videos because I was a 20-something girl trying to make money online, and they believed my advice more than that of influencers with thousands of followers.

I think today is the greatest time to grow on any platform as an ordinary person. Normal individuals give honest recommendations about what works for them, and their results look easier to replicate because they have the same options as us.

Nobody cares how a millionaire acquired a Lamborghini unless it's entertaining. Education works now. Real counsel from average people is replicable.

Many individuals don't appreciate how false influencers seem (unreal bodies and excessive surgery and retouching) since it makes them feel uneasy.

That's why body-positive advertisements have been so effective, but they've lost ground in places like TikTok, where the audience wants more content from everyday people than influencers living amazing lives. More people will relate to your content if you appear genuine.

Last thoughts

Influencers are dwindling. People want more real people to give real advice and demonstrate an ordinary life.

People will enjoy anything you tell about your daily life as long as you provide value, and you can build a following rapidly if you're honest.

This is a millionaire industry that is getting more expensive and will go with what works, so stand out immediately.

Stephen Moore

3 years ago

Adam Neumann is working to create the future of living in a classic example of a guy failing upward.

The comeback tour continues…

First, he founded a $47 billion co-working company (sorry, a “tech company”).

He established WeLive to disrupt apartment life.

Then he created WeGrow, a school that tossed aside the usual curriculum to feed children's souls and release their potential.

He raised the world’s consciousness.

Then he blew it all up (without raising the world’s consciousness). (He bought a wave pool.)

Adam Neumann's WeWork business burned investors' money. The founder sailed off with unimaginable riches, leaving long-time employees with worthless stocks and the company bleeding money. His track record, which includes a failing baby clothing company, should have stopped investors cold.

Once the dust settled, folks went on. We forgot about the Neumanns! We forgot about the private jets, company retreats, many houses, and WeWork's crippling. In that moment, the prodigal son of entrepreneurship returned, choosing the blockchain as his industry. His homecoming tour began with Flowcarbon, which sold Goddess Nature Tokens to lessen companies' carbon footprints.

Did it work?

Of course not.

Despite receiving $70 million from Andreessen Horowitz's a16z, the project has been halted just two months after its announcement.

This triumph should lower his grade.

Neumann seems to have moved on and has another revolutionary idea for the future of living. Flow (not Flowcarbon) aims to help people live in flow and will launch in 2023. It's the classic Neumann pitch: lofty goals, yogababble, and charisma to attract investors.

It's a winning formula for one investment fund. a16z has backed the project with its largest single check, $350 million. It has a splash page and 3,000 rental units, but is valued at over $1 billion. The blog post praised Neumann for reimagining the office and leading a paradigm-shifting global company.

Flow's mission is to solve the nation's housing crisis. How? Idk. It involves offering community-centric services in apartment properties to the same remote workforce he once wooed with free beer and a pingpong table. Revolutionary! It seems the goal is to apply WeWork's goals of transforming physical spaces and building community to apartments to solve many of today's housing problems.

The elevator pitch probably sounded great.

At least a16z knows it's a near-impossible task, calling it a seismic shift. Marc Andreessen opposes affordable housing in his wealthy Silicon Valley town. As details of the project emerge, more investors will likely throw ethics and morals out the window to go with the flow, throwing money at a man known for burning through it while building toxic companies, hoping he can bank another fantasy valuation before it all crashes.

Insanity is repeating the same action and expecting a different result. Everyone on the Neumann hype train needs to sober up.

Like WeWork, this venture Won’tWork.

Like before, it'll cause a shitstorm.

You might also like

Sam Hickmann

3 years ago

What is headline inflation?

Headline inflation is the raw Consumer Price Index (CPI) reported monthly by the Bureau of Labor Statistics (BLS). CPI measures inflation by calculating the cost of a fixed basket of goods. The CPI uses a base year to index the current year's prices.
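As a rough illustration of how a base year indexes current prices, here is a minimal sketch. The function name and the basket costs are hypothetical, chosen only for the example; the actual BLS methodology involves weighting and sampling that this does not attempt to reproduce.

```python
def price_index(basket_cost_current: float, basket_cost_base: float) -> float:
    """Index the current cost of a fixed basket against its base-period cost.

    The base period is scaled to 100, so a result of 125 means the same
    basket costs 25% more than it did in the base period.
    """
    if basket_cost_base <= 0:
        raise ValueError("base-period basket cost must be positive")
    return basket_cost_current / basket_cost_base * 100.0


# Hypothetical basket costs, for illustration only.
base_cost = 200.0      # cost of the basket in the base year
current_cost = 250.0   # cost of the same basket today

index = price_index(current_cost, base_cost)
change_since_base = index - 100.0  # percent change relative to the base year

print(f"index: {index:.1f}, change since base year: {change_since_base:.1f}%")
```

Because the basket is fixed, any movement in the index reflects price changes rather than changes in what people buy.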

Explaining Inflation

As it includes all aspects of an economy that experience inflation, headline inflation is not adjusted to remove volatile figures. Headline inflation is often linked to cost-of-living changes, which is useful for consumers.

The headline figure doesn't account for seasonality or volatile food and energy prices, which are removed from the core CPI. Headline inflation is usually annualized, so a headline figure of 4% corresponds to a monthly rate that, if repeated for 12 months, would produce 4% inflation for the year. Top-line inflation is compared year-over-year.
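The relationship between a monthly rate and an annualized figure can be sketched as follows. This assumes simple monthly compounding; the function names are hypothetical, and the sketch is not a description of how any statistical agency publishes its numbers.

```python
def annualize_monthly_rate(monthly_rate: float) -> float:
    """Annual rate implied by repeating the same monthly rate 12 times."""
    return (1.0 + monthly_rate) ** 12 - 1.0


def monthly_rate_for_annual(annual_rate: float) -> float:
    """Monthly rate that compounds to the given annual rate over 12 months."""
    return (1.0 + annual_rate) ** (1.0 / 12.0) - 1.0


# A 4% annualized figure corresponds to a monthly rate of roughly a third
# of a percent, not 4% per month.
monthly = monthly_rate_for_annual(0.04)
print(f"monthly rate for 4% a year: {monthly:.4%}")
print(f"round trip back to annual:  {annualize_monthly_rate(monthly):.4%}")
```

The round trip shows the two functions are inverses of each other under the compounding assumption.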

Inflation's downsides

Inflation erodes future dollar values, can stifle economic growth, and can raise interest rates. Core inflation is often considered a better metric than headline inflation. Investors and economists use headline and core results to set growth forecasts and monetary policy.

Core Inflation

Core inflation removes volatile CPI components that can distort the headline number. Food and energy costs are commonly removed. Environmental shifts that affect crop growth can affect food prices outside of the economy. Political dissent can affect energy costs, such as oil production.

From 1957 to 2018, the U.S. averaged 3.64 percent core inflation. In June 1980, the rate reached 13.60%. May 1957 had 0% inflation. The Fed's core inflation target for 2022 is 3%.

Central bank:

A central bank has privileged control over a nation's or group's money and credit. Modern central banks are responsible for monetary policy and bank regulation. Central banks are anti-competitive and non-market-based. Many central banks are not government agencies and are therefore considered politically independent. Even if a central bank isn't government-owned, its privileges are protected by law. A central bank's legal monopoly status gives it the right to issue banknotes and cash. Private commercial banks can only issue demand deposits.

What are living costs?

The cost of living is the amount needed to cover housing, food, taxes, and healthcare in a certain place and time. Cost of living is used to compare the cost of living between cities and is tied to wages. If expenses are higher in a city like New York, salaries must be higher so people can live there.

What is the U.S. Bureau of Labor Statistics?

BLS collects and distributes economic and labor market data about the U.S. Its reports include the CPI and PPI, both important inflation measures.

Stephen Moore

3 years ago

Trading Volume on OpenSea Drops by 99% as the NFT Boom Comes to an End

Wasn't that a get-rich-quick scheme?

OpenSea processed $2.7 billion in NFT transactions in May 2021.

Fueled by a crypto bull run, rumors of unfathomable riches, and FOMO, Bored Apes, Crypto Punks, and other JPEG-format trash projects flew off the virtual shelves, snatched up by retail investors and celebrities alike.

Over a year later, those shelves are overflowing and warehouses are backlogged. Since March, I've been writing less. In May and June, the bubble was close to bursting.

Apparently, the boom has finally peaked.

This bubble has punctured, and deflation has begun. On Aug. 28, OpenSea processed $9.34 million.

From that euphoric high of $2.7 billion, $9.34 million represents a spectacular decline of 99%.
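The arithmetic behind that figure can be checked with a short sketch. The function name is hypothetical; the two volumes are the ones quoted above.

```python
def percent_decline(peak: float, current: float) -> float:
    """Percentage drop from a peak value to a current value."""
    if peak <= 0:
        raise ValueError("peak must be positive")
    return (peak - current) / peak * 100.0


peak_volume = 2.7e9      # $2.7 billion, as quoted above
recent_volume = 9.34e6   # $9.34 million on Aug. 28, as quoted above

drop = percent_decline(peak_volume, recent_volume)
print(f"decline: {drop:.2f}%")
```

For non-negative volumes, a decline computed this way can never exceed 100%, since the most a value can fall is to zero.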

OpenSea contradicts the data. A trading platform spokeswoman stated the comparison is unfair because it compares the site's highest and lowest trading days. They're the perfect two data points to assess the drop. OpenSea chooses to use ETH volume measures, which ignore crypto's shifting price. Since January 2022, monthly ETH volume has dropped 140%, according to Dune.

Unconvincing counterargument.

Further OpenSea indicators point to declining NFT demand:

Since January 2022, daily user visits have decreased by 50%.

Daily transactions have decreased by 50% since the beginning of the year in the same manner.

Off-platform, the floor price of Bored Apes has dropped from 145 ETH to 77 ETH. (At an ETH price of $4,800, that is a reduction from roughly $700,000 to $370,000.) Google search data shows waning popular interest.

It is a trend that will soon vanish, just like laser eyes.

NFTs haven't moved since the new year. Eminem and Snoop Dogg can utilize their apes in music videos or as 3D visuals to perform at the VMAs, but the reality is that NFTs have lost their public appeal and the market is trying to regain its footing.

Why have they lost popularity?

It's a broken record by now: the technology still lacks genuine use cases a year and a half after becoming popular.

They're pricey prestige symbols that have made a few people rich through cunning timing, less-than-savory scams, or rug pulls. Over $10.5 billion has been taken through frauds, most of which are NFT enterprises promising to be the next Bored Apes, according to Web3 Is Going Just Great. As the market falls, many ordinary investors realize they bought into a self-fulfilling ecosystem that has stalled. Data suggests many NFTs are sold between accounts held by the same owner to boost their price. Most projects rely on social media excitement to debut with a high price before the first owners sell and laugh all the way to the bank. When the excitement doesn't materialize, the project fails, leaving investors high and dry.

NFTs are fading like laser eyes. Most people pushing the technology don't believe in it or the future it may bring. No, they just need a Kool-Aid-drunk buyer.

Everybody wins. When your JPEGs are worth 99% less than when you bought them, you've lost.

When demand reaches zero, many will lose.

Blake Montgomery

3 years ago

Explaining Twitter Files

Elon Musk, Matt Taibbi, the 'Twitter Files,' and Hunter Biden's laptop: what gives?

Matt Taibbi released "The Twitter Files," a batch of emails sent by Twitter executives discussing the company's decision to stop an October 2020 New York Post story online.

What's on Twitter? New York Post and Fox News call them "bombshell" documents. Or, as a Post columnist admitted, are they "not the smoking gun"? Onward!

What started this?

The New York Post published an exclusive, potentially explosive story in October 2020: Biden's Secret Emails: Ukrainian executive thanks Hunter Biden for 'meeting' veep dad. The story purported to report the contents of a laptop brought to the tabloid by a Delaware computer repair shop owner who said it belonged to President Biden's second son, Hunter Biden. Emails and files on the laptop allegedly showed how Hunter peddled influence with Ukrainian businessmen and included a "raunchy 12-minute video" of Hunter smoking crack and having sex.

Twitter banned links to the Post story after it was published, calling it "hacked material." The Post's Twitter account was suspended for multiple days.

Why? Yoel Roth, Twitter's former head of trust and safety, said the company couldn't verify the story, implying they didn't trust the Post.

Twitter's stated purpose rarely includes verifying news stories. This seemed like intentional political interference. This story was hard to verify because the people who claimed to have found the laptop wouldn't give it to other newspapers. (Much of the story, including Hunter's business dealings in Ukraine and China, was later confirmed.)

Roth: "It looked like a hack and leak."

So what are the “Twitter Files?”

Twitter's decision to bury the story became a political scandal, and new CEO Elon Musk promised an explanation. The result was the Twitter Files, a name that echoes the earlier Facebook leaks.

Musk promised exclusive details of "what really happened" with Hunter Biden late Friday afternoon. The tweet was punctuated with a popcorn emoji.

Three hours later, journalist Matt Taibbi tweeted more than three dozen tweets based on internal Twitter documents that revealed "a Frankensteinian tale of a human-built mechanism grown out of its designer's control."

Musk sees this release as a way to shape Twitter's public perception and internal culture in his image. We don't know if the CEO gave Taibbi the documents. Musk hyped the document dump before and during publication, but Taibbi cited "internal sources."

Taibbi shares email screenshots showing Twitter execs discussing the Post story and blocking its distribution. Taibbi says the emails show Twitter's "extraordinary steps" to bury the story.

Twitter communications chief Brandon Borrman has the most damning quote in the Files, asking in effect: can we say this is policy? The story seemed unbelievable. It seemed like a hack... or not? Could Twitter, which ex-CEO Dick Costolo called "the free speech wing of the free speech party," censor a news story?

Many on the right say the Twitter Files prove the company acted at the behest of Democrats. Both parties had these tools, writes Taibbi. In 2020, both the Trump White House and Biden campaign made requests. He says the system for reporting tweets for deletion is unbalanced because Twitter employees' political donations favor Democrats. Perhaps. These donations may have helped Democrats connect with Twitter staff, but it's also possible they didn't. No emails in Taibbi's cache show these alleged illicit relations or any actions Twitter employees took as a result.

Even Musk's supporters were surprised by the drop. Miranda Devine of the New York Post told Tucker Carlson the documents weren't "the smoking gun we'd hoped for." Sebastian Gorka said on Truth Social, "So far, I'm deeply underwhelmed. DC Democrats collude with Palo Alto Democrats. Whoop!" The Washington Free Beacon's Joe Simonson said the Twitter files are "underwhelming." Twitter was staffed by Democrats who did their bidding. (Why?)

If "The Twitter Files" matter, why?

The emails showing what led Twitter to suppress the Hunter Biden laptop story have real news value. It's rare for a large and valuable company like Twitter to address wrongdoing so thoroughly. Emails resemble FOIA documents. They describe internal drama at a company with government-level power. Katie Notopoulos tweeted, "Any news outlet would've loved this scoop! It's not a 'scandal' as teased."

Twitter's new owner calls it "the de facto public town square," implying public accountability. Like a government agency. Though it's exciting to receive once-hidden documents in response to a FOIA, they may be boring and tell you nothing new. Like Twitter files. We learned how Twitter blocked the Post's story, but not why. Before these documents were released, we knew Twitter had suppressed the story and who was involved.

These people were disciplined and left Twitter. Musk fired Vijaya Gadde, the former CLO who reportedly played a "key role" in the decision. Roth quit over Musk's "dictatorship." Musk arrived after Borrman left. Jack Dorsey, then-CEO, has left. Did those who digitally quarantined the Post's story favor Joe Biden and the Democrats? Republican Party opposition and Trump hatred? New York Post distaste? According to our documents, no. Was there political and press interference? True. We knew.

Taibbi interviewed anonymous ex-Twitter employees about the decision; all expressed shock and outrage. One source said, "Everyone knew this was fucked." Since Taibbi doesn't quote that expletive, we can assume the leaked emails contained few or no sensational quotes. These executives said little to support nefarious claims.

Outlets more invested in the Hunter Biden story than Gizmodo seem vexed by the release and muted headlines. The New York Post, which has never shied away from a blaring headline in its 221-year history, owns the story of Hunter Biden's laptop. Two Friday-night Post alerts about Musk's actions were restrained: "Elon Musk will drop Twitter files on NY Post-Hunter Biden laptop censorship today" and "Elon Musk's Twitter dropped Post censorship details from Biden's laptop." Fox News' Apple News push alert read, "Elon Musk drops Twitter censorship documents."

Bombshell, bombshell, bombshell… what, exactly, is the bombshell? Maybe we've heard this story too much and are missing the big picture. Maybe these documents detail a well-documented decision.

The Post explains why on its website. "Hunter Biden laptop bombshell: Twitter invented reason to censor Post's reporting," its headline says.

Twitter's ad hoc decision to moderate a tabloid's content is not surprising. The social network had done this for years as it battled toxic users—violent white nationalists, virulent transphobes, harassers and bullies of all political stripes, etc. No matter how much Musk crows, the company never had content moderation under control. Buzzfeed's 2016 investigation showed how Twitter has struggled with abusive posters since 2006. Jack Dorsey and his executives improvised, like Musk.

Did the US government interfere with the ex-social VP's media company? That's shocking, a bombshell. Musk said Friday, "Twitter suppressing free speech by itself is not a 1st amendment violation, but acting under government orders with no judicial review is." Indeed! Taibbi believed this. August 2022: "The laptop is secondary." Zeynep Tufekci, a Columbia professor and New York Times columnist, says the FBI is cutting true story distribution. Taibbi retracted the claim Friday night: "I've seen no evidence of government involvement in the laptop story."

What’s the bottom line?

I'm still not sure what's at stake in the Hunter Biden scandal after dozens of New York Post articles, hundreds of hours of Fox News airtime, and thousands of tweets. Briefly: Joe Biden's son left his laptop with a questionable repairman. FBI confiscated it? The repairman made a copy and gave it to Rudy Giuliani's lawyer. The Post got it from Steve Bannon. On that laptop were videos of Hunter Biden smoking crack, cavorting with prostitutes, and emails about introducing his father to a Ukrainian businessman for $50,000 a month. Joe Biden urged Ukraine to fire a prosecutor investigating the company. What? The story seems to be about Biden family business dealings, right?

The discussion has moved past that point anyway. Now, the story is the censorship of it. Adrienne Rich wrote in "Diving Into the Wreck" that she came for "the wreck and not the story of the wreck." No matter how far we go, Hunter Biden's laptop is done. Now, the crash's story matters.

I'm dizzy. Katherine Miller of BuzzFeed wrote, "I know who I believe, and you probably do, too. To believe one is to disbelieve the other, which implicates us in the decision; we're stuck." I'm stuck. Hunter Biden's laptop is a political fabrication. You choose. I've decided.

This could change. Twitter Files drama continues. Taibbi said, "Much more to come." I'm dizzy.