More on Marketing

Sammy Abdullah

3 years ago

How to properly price SaaS

Price Intelligently put out amazing content on pricing your SaaS product. This blog's link to the whole report is worth reading. Our key takeaways are below.

Don't base prices on the competition. Competitor-based pricing has clear drawbacks. Their pricing approach is yours. Your company offers customers something unique. Otherwise, you wouldn't create it. This strategy is static, therefore you can't add value by raising prices without outpricing competitors. Look, but don't touch is the competitor-based moral. You want to know your competitors' prices so you're in the same ballpark, but they shouldn't guide your selections. Competitor-based pricing also drives down prices.

Value-based pricing wins. This is customer-based pricing. Value-based pricing looks outward, not inward or laterally at competitors. Your clients are the best source of pricing information. By valuing customer comments, you're focusing on buyers. They'll decide if your pricing and packaging are right. In addition to asking consumers about cost savings or revenue increases, look at data like number of users, usage per user, etc.

Value-based pricing increases prices. As you learn more about the client and your worth, you'll know when and how much to boost rates. Every 6 months, examine pricing.

Cloning top customers. You clone your consumers by learning as much as you can about them and then reaching out to comparable people or organizations. You can't accomplish this without knowing your customers. Segmenting and reproducing them requires as much detail as feasible. Offer pricing plans and feature packages for 4 personas. The top plan should state Contact Us. Your highest-value customers want more advice and support.

Question your 4 personas. What's the one item you can't live without? Which integrations matter most? Do you do analytics? Is support important or does your company self-solve? What's too cheap? What's too expensive?

Not everyone likes per-user pricing. SaaS organizations often default to per-user analytics. About 80% of companies utilizing per-user pricing should use an alternative value metric because their goods don't give more value with more users, so charging for them doesn't make sense.

At least 3:1 LTV/CAC. Break even on the customer within 2 years, and LTV to CAC is greater than 3:1. Because customer acquisition costs are paid upfront but SaaS revenues accrue over time, SaaS companies face an early financial shortfall while paying back the CAC.

ROI should be >20:1. Indeed. Ensure the customer's ROI is 20x the product's cost. Microsoft Office costs $80 a year, but consumers would pay much more to maintain it.

A/B Testing. A/B testing is guessing. When your pricing page varies based on assumptions, you'll upset customers. You don't have enough customers anyway. A/B testing optimizes landing pages, design decisions, and other site features when you know the problem but not pricing.

Don't discount. It cheapens the product, makes it permanent, and increases churn. By discounting, you're ruining your pricing analysis.

Jano le Roux

3 years ago

Here's What I Learned After 30 Days Analyzing Apple's Microcopy

Move people with tiny words.

Apple fanboy here.

Macs are awesome.

Their iPhones rock.

$19 cloths are great.

$999 stands are amazing.

I love Apple's microcopy even more.

It's like the marketing goddess bit into the Apple logo and blessed the world with microcopy.

I took on a 30-day micro-stalking mission.

Every time I caught myself wasting time on YouTube, I had to visit Apple’s website to learn the secrets of the marketing goddess herself.

We've learned. Golden apples are calling.

Cut the friction

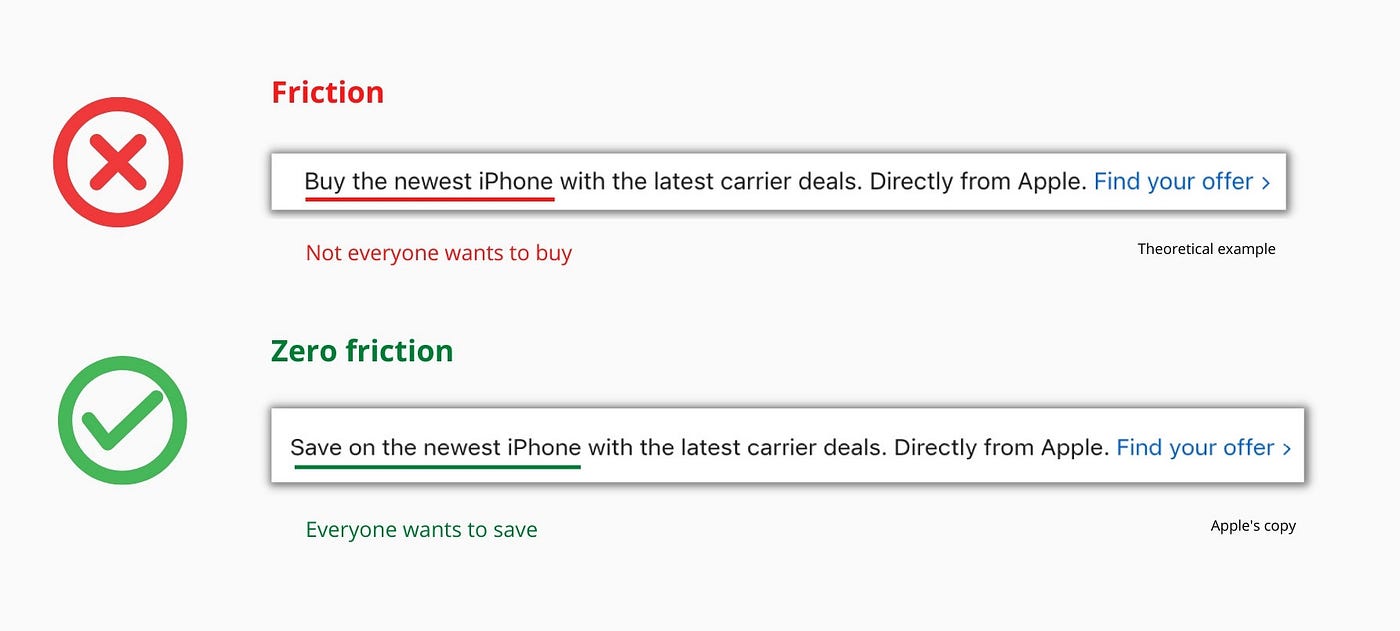

Benefit-first, not commitment-first.

Brands lose customers through friction.

Most brands don't think like customers.

Brands want sales.

Brands want newsletter signups.

Here's their microcopy:

“Buy it now.”

“Sign up for our newsletter.”

Both are difficult. They ask for big commitments.

People are simple creatures. Want pleasure without commitment.

Apple nails this.

So, instead of highlighting the commitment, they highlight the benefit of the commitment.

Saving on the latest iPhone sounds easier than buying it. Everyone saves, but not everyone buys.

A subtle change in framing reduces friction.

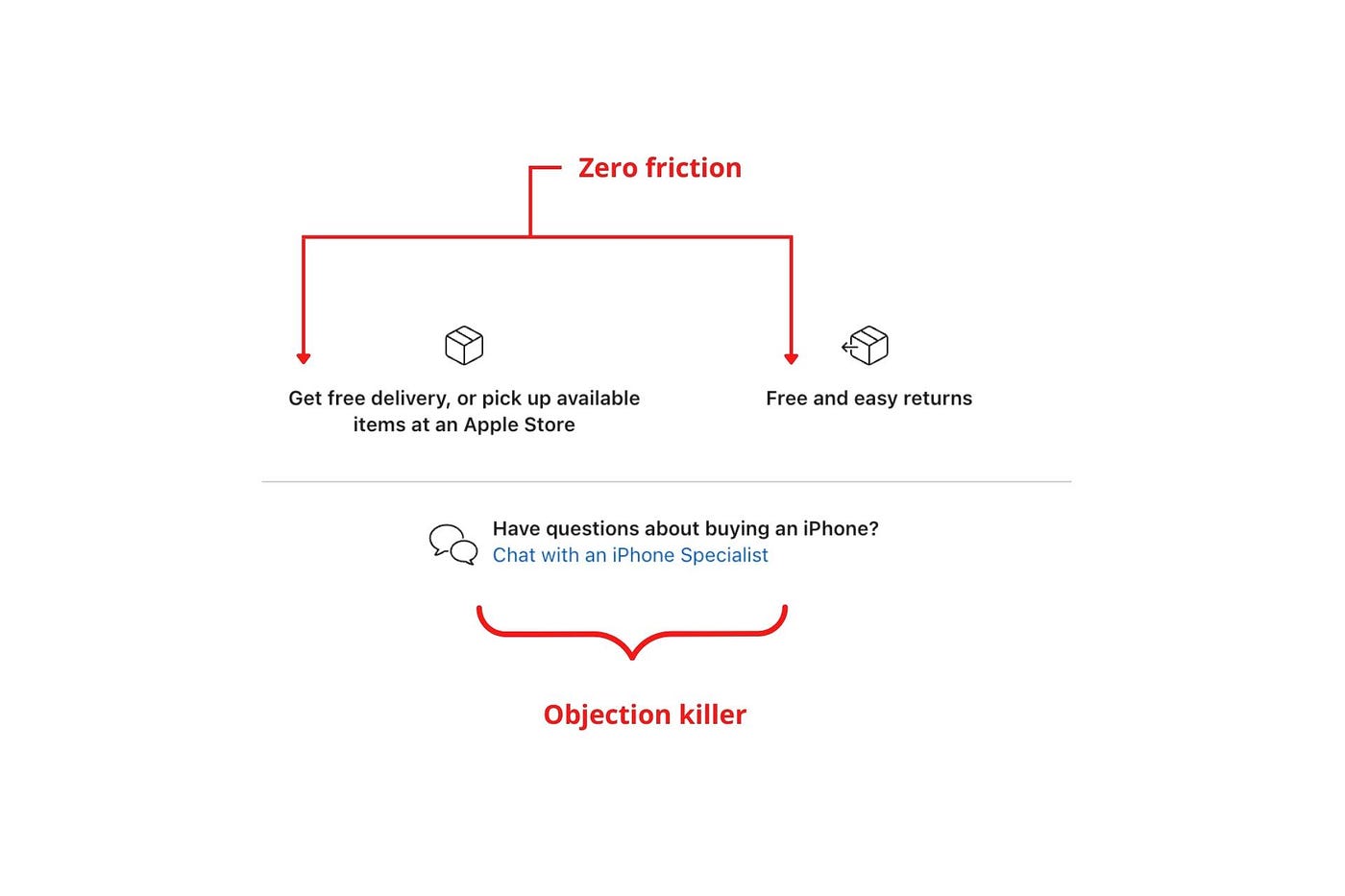

Apple eliminates customer objections to reduce friction.

Less customer friction means simpler processes.

Apple's copy expertly reassures customers about shipping fees and not being home. Apple assures customers that returning faulty products is easy.

Apple knows that talking to a real person is the best way to reduce friction and improve their copy.

Always rhyme

Learn about fine rhyme.

Poets make things beautiful with rhyme.

Copywriters use rhyme to stand out.

Apple’s copywriters have mastered the art of corporate rhyme.

Two techniques are used.

1. Perfect rhyme

Here, rhymes are identical.

2. Imperfect rhyme

Here, rhyming sounds vary.

Apple prioritizes meaning over rhyme.

Apple never forces rhymes that don't fit.

It fits so well that the copy seems accidental.

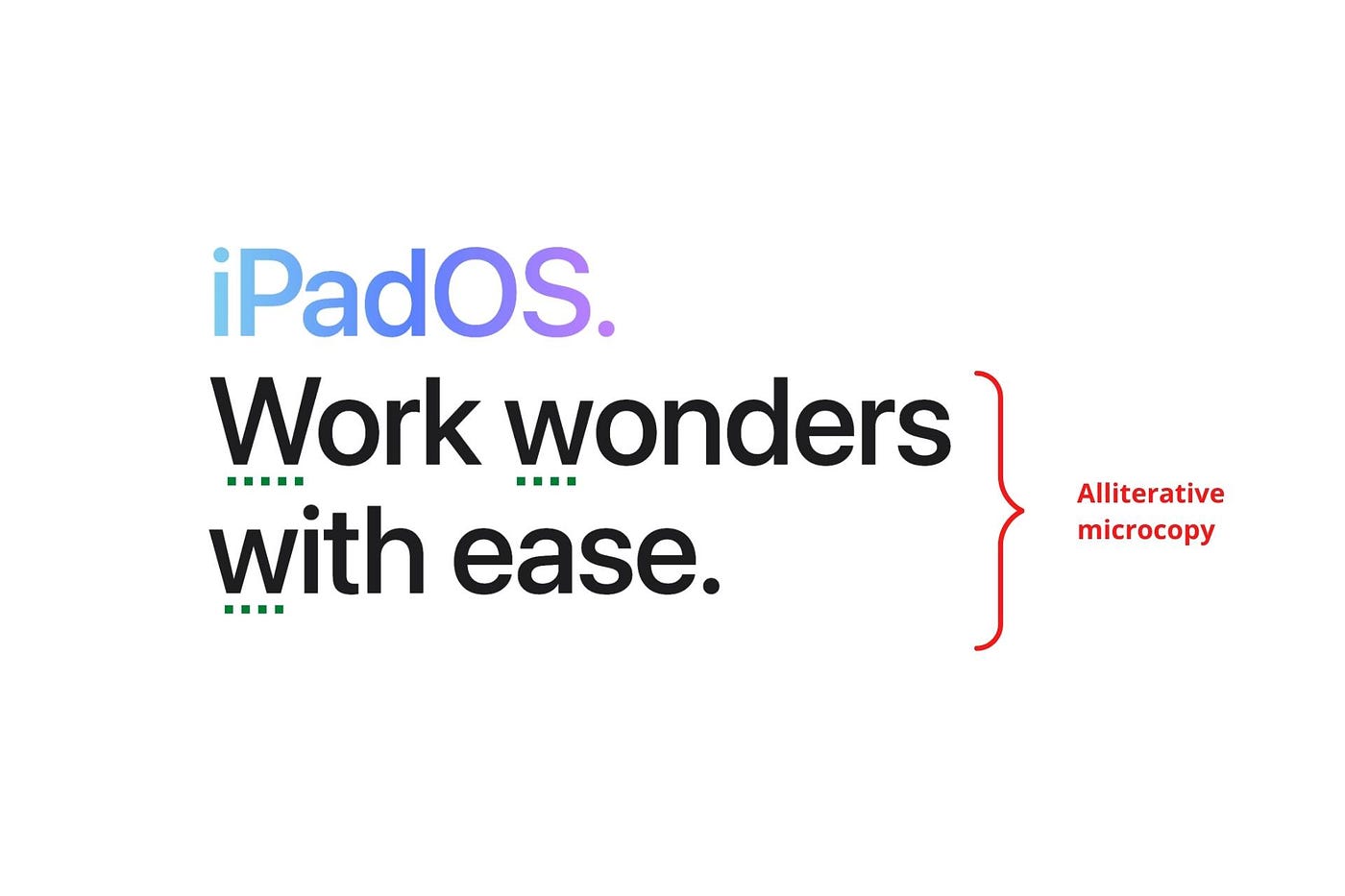

Add alliteration

Alliteration always entertains.

Alliteration repeats initial sounds in nearby words.

Apple's copy uses alliteration like no other brand I've seen to create a rhyming effect or make the text more fun to read.

For example, in the sentence "Sam saw seven swans swimming," the initial "s" sound is repeated five times. This creates a pleasing rhythm.

Microcopy overuse is like pouring ketchup on a Michelin-star meal.

Alliteration creates a memorable phrase in copywriting. It's subtler than rhyme, and most people wouldn't notice; it simply resonates.

I love how Apple uses alliteration and contrast between "wonders" and "ease".

Assonance, or repeating vowels, isn't Apple's thing.

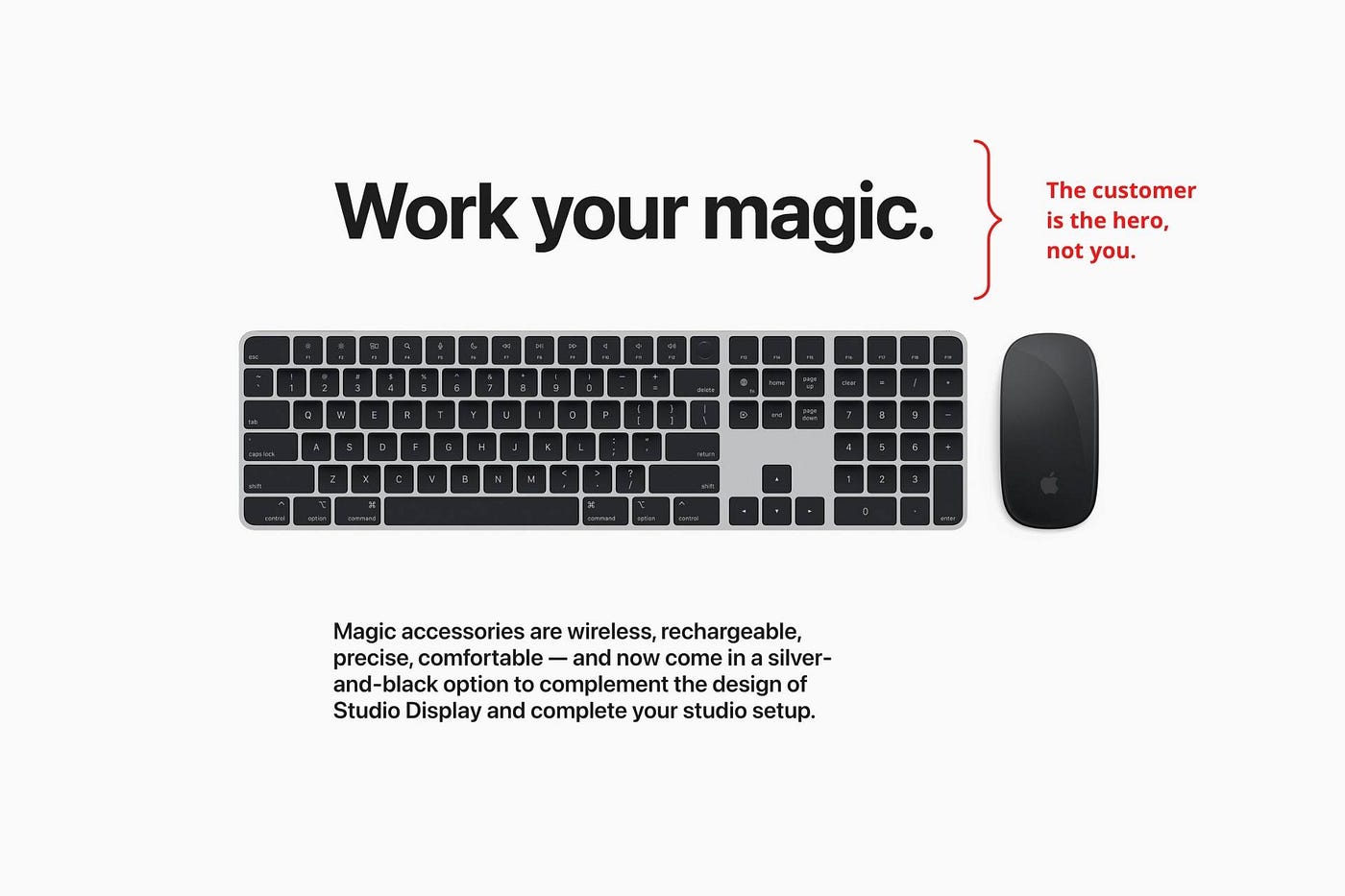

You ≠ Hero, Customer = Hero

Your brand shouldn't be the hero.

Because they'll be using your product or service, your customer should be the hero of your copywriting. With your help, they should feel like they can achieve their goals.

I love how Apple emphasizes what you can do with the machine in this microcopy.

It's divine how they position their tools as sidekicks to help below.

This one takes the cake:

Dialogue-style writing

Conversational copy engages.

Excellent copy Like sharing gum with a friend.

This helps build audience trust.

Apple does this by using natural connecting words like "so" and phrases like "But that's not all."

Snowclone-proof

The mother of all microcopy techniques.

A snowclone uses an existing phrase or sentence to create a new one. The new phrase or sentence uses the same structure but different words.

It’s usually a well know saying like:

To be or not to be.

This becomes a formula:

To _ or not to _.

Copywriters fill in the blanks with cause-related words. Example:

To click or not to click.

Apple turns "survival of the fittest" into "arrival of the fittest."

It's unexpected and surprises the reader.

So this was fun.

But my fun has just begun.

Microcopy is 21st-century poetry.

I came as an Apple fanboy.

I leave as an Apple fanatic.

Now I’m off to find an apple tree.

Cause you know how it goes.

(Apples, trees, etc.)

This post is a summary. Original post available here.

Joseph Mavericks

3 years ago

You Don't Have to Spend $250 on TikTok Ads Because I Did

900K impressions, 8K clicks, and $$$ orders…

I recently started dropshipping. Now that I own my business and can charge it as a business expense, it feels less like money wasted if it doesn't work. I also made t-shirts to sell. I intended to open a t-shirt store and had many designs on a hard drive. I read that Tiktok advertising had a high conversion rate and low cost because they were new. According to many, the advertising' cost/efficiency ratio would plummet and become as bad as Google or Facebook Ads. Now felt like the moment to try Tiktok marketing and dropshipping. I work in marketing for a SaaS firm and have seen how poorly ads perform. I wanted to try it alone.

I set up $250 and ran advertising for a week. Before that, I made my own products, store, and marketing. In this post, I'll show you my process and results.

Setting up the store

Dropshipping is a sort of retail business in which the manufacturer ships the product directly to the client through an online platform maintained by a seller. The seller takes orders but has no stock. The manufacturer handles all orders. This no-stock concept increases profitability and flexibility.

In my situation, I used previous t-shirt designs to make my own product. I didn't want to handle order fulfillment logistics, so I looked for a way to print my designs on demand, ship them, and handle order tracking/returns automatically. So I found Printful.

I needed to connect my backend and supplier to a storefront so visitors could buy. 99% of dropshippers use Shopify, but I didn't want to master the difficult application. I wanted a one-day project. I'd previously worked with Big Cartel, so I chose them.

Big Cartel doesn't collect commissions on sales, simply a monthly flat price ($9.99 to $19.99 depending on your plan).

After opening a Big Cartel account, I uploaded 21 designs and product shots, then synced each product with Printful.

Developing the ads

I mocked up my designs on cool people photographs from placeit.net, a great tool for creating product visuals when you don't have a studio, camera gear, or models to wear your t-shirts.

I opened an account on the website and had advertising visuals within 2 hours.

Because my designs are simple (black design on white t-shirt), I chose happy, stylish people on plain-colored backdrops. After that, I had to develop an animated slideshow.

Because I'm a graphic designer, I chose to use Adobe Premiere to create animated Tiktok advertising.

Premiere is a fancy video editing application used for more than advertisements. Premiere is used to edit movies, not social media marketing. I wanted this experiment to be quick, so I got 3 social media ad templates from motionarray.com and threw my visuals in. All the transitions and animations were pre-made in the files, so it only took a few hours to compile. The result:

I downloaded 3 different soundtracks for the videos to determine which would convert best.

After that, I opened a Tiktok business account, uploaded my films, and inserted ad info. They went live within one hour.

The (poor) outcomes

As a European company, I couldn't deliver ads in the US. All of my advertisements' material (title, description, and call to action) was in English, hence they continued getting rejected in Europe for countries that didn't speak English. There are a lot of them:

I lost a lot of quality traffic, but I felt that if the images were engaging, people would check out the store and buy my t-shirts. I was wrong.

51,071 impressions on Day 1. 0 orders after 411 clicks

114,053 impressions on Day 2. 1.004 clicks and no orders

Day 3: 987 clicks, 103,685 impressions, and 0 orders

101,437 impressions on Day 4. 0 orders after 963 clicks

115,053 impressions on Day 5. 1,050 clicks and no purchases

125,799 impressions on day 6. 1,184 clicks, no purchases

115,547 impressions on Day 7. 1,050 clicks and no purchases

121,456 impressions on day 8. 1,083 clicks, no purchases

47,586 impressions on Day 9. 419 Clicks. No orders

My overall conversion rate for video advertisements was 0.9%. TikTok's paid ad formats all result in strong engagement rates (ads average 3% to 12% CTR to site), therefore a 1 to 2% CTR should have been doable.

My one-week experiment yielded 8,151 ad clicks but no sales. Even if 0.1% of those clicks converted, I should have made 8 sales. Even companies with horrible web marketing would get one download or trial sign-up for every 8,151 clicks. I knew that because my advertising were in English, I had no impressions in the main EU markets (France, Spain, Italy, Germany), and that this impacted my conversion potential. I still couldn't believe my numbers.

I dug into the statistics and found that Tiktok's stats didn't match my store traffic data.

Looking more closely at the numbers

My ads were approved on April 26 but didn't appear until April 27. My store dashboard showed 440 visitors but 1,004 clicks on Tiktok. This happens often while tracking campaign results since different platforms handle comparable user activities (click, view) differently. In online marketing, residual data won't always match across tools.

My data gap was too large. Even if half of the 1,004 persons who clicked closed their browser or left before the store site loaded, I would have gained 502 visitors. The significant difference between Tiktok clicks and Big Cartel store visits made me suspicious. It happened all week:

Day 1: 440 store visits and 1004 ad clicks

Day 2: 482 store visits, 987 ad clicks

3rd day: 963 hits on ads, 452 store visits

443 store visits and 1,050 ad clicks on day 4.

Day 5: 459 store visits and 1,184 ad clicks

Day 6: 430 store visits and 1,050 ad clicks

Day 7: 409 store visits and 1,031 ad clicks

Day 8: 166 store visits and 418 ad clicks

The disparity wasn't related to residual data or data processing. The disparity between visits and clicks looked regular, but I couldn't explain it.

After the campaign concluded, I discovered all my creative assets (the videos) had a 0% CTR and a $0 expenditure in a separate dashboard. Whether it's a dashboard reporting issue or a budget allocation bug, online marketers shouldn't see this.

Tiktok can present any stats they want on their dashboard, just like any other platform that runs advertisements to promote content to its users. I can't verify that 895,687 individuals saw and clicked on my ad. I invested $200 for what appears to be around 900K impressions, which is an excellent ROI. No one bought a t-shirt, even an unattractive one, out of 900K people?

Would I do it again?

Nope. Whether I didn't make sales because Tiktok inflated the dashboard numbers or because I'm horrible at producing advertising and items that sell, I’ll stick to writing content and making videos. If setting up a business and ads in a few days was all it took to make money online, everyone would do it.

Video advertisements and dropshipping aren't dead. As long as the internet exists, people will click ads and buy stuff. Converting ads and selling stuff takes a lot of work, and I want to focus on other things.

I had always wanted to try dropshipping and I’m happy I did, I just won’t stick to it because that’s not something I’m interested in getting better at.

If I want to sell t-shirts again, I'll avoid Tiktok advertisements and find another route.

You might also like

Nik Nicholas

3 years ago

A simple go-to-market formula

“Poor distribution, not poor goods, is the main reason for failure” — Peter Thiel.

Here's an easy way to conceptualize "go-to-market" for your distribution plan.

One equation captures the concept:

Distribution = Ecosystem Participants + Incentives

Draw your customers' ecosystem. Set aside your goods and consider your consumer's environment. Who do they deal with daily?

First, list each participant. You want an exhaustive list, but here are some broad categories.

In-person media services

Websites

Events\Networks

Financial education and banking

Shops

Staff

Advertisers

Twitter influencers

Draw influence arrows. Who's affected? I'm not just talking about Instagram selfie-posters. Who has access to your consumer and could promote your product if motivated?

The thicker the arrow, the stronger the relationship. Include more "influencers" if needed. Customer ecosystems are complex.

3. Incentivize ecosystem players. “Show me the incentive and I will show you the result.“, says Warren Buffet's business partner Charlie Munger.

Strong distribution strategies encourage others to promote your product to your target market by incentivizing the most prominent players. Incentives can be financial or non-financial.

Financial rewards

Usually, there's money. If you pay Facebook, they'll run your ad. Salespeople close deals for commission. Giving customers bonus credits will encourage referrals.

Most businesses underuse non-financial incentives.

Non-cash incentives

Motivate key influencers without spending money to expand quickly and cheaply. What can you give a client-connector for free?

Here are some ideas:

Are there any other features or services available?

Titles or status? Tinder paid college "ambassadors" for parties to promote its dating service.

Can I get early/free access? Facebook gave a select group of developers "exclusive" early access to their AR platform.

Are you a good host? Pharell performed at YPlan's New York launch party.

Distribution? Apple's iPod earphones are white so others can see them.

Have an interesting story? PR rewards journalists by giving them a compelling story to boost page views.

Prioritize distribution.

More time spent on distribution means more room in your product design and business plan. Once you've identified the key players in your customer's ecosystem, talk to them.

Money isn't your only resource. Creative non-monetary incentives may be more effective and scalable. Give people something useful and easy to deliver.

Matthew Royse

3 years ago

7 ways to improve public speaking

How to overcome public speaking fear and give a killer presentation

"Public speaking is people's biggest fear, according to studies. Death's second. The average person is better off in the casket than delivering the eulogy." — American comedian, actor, writer, and producer Jerry Seinfeld

People fear public speaking, according to research. Public speaking can be intimidating.

Most professions require public speaking, whether to 5, 50, 500, or 5,000 people. Your career will require many presentations. In a small meeting, company update, or industry conference.

You can improve your public speaking skills. You can reduce your anxiety, improve your performance, and feel more comfortable speaking in public.

“If I returned to college, I'd focus on writing and public speaking. Effective communication is everything.” — 38th president Gerald R. Ford

You can deliver a great presentation despite your fear of public speaking. There are ways to stay calm while speaking and become a more effective public speaker.

Seven tips to improve your public speaking today. Let's help you overcome your fear (no pun intended).

Know your audience.

"You're not being judged; the audience is." — Entrepreneur, author, and speaker Seth Godin

Understand your audience before speaking publicly. Before preparing a presentation, know your audience. Learn what they care about and find useful.

Your presentation may depend on where you're speaking. A classroom is different from a company meeting.

Determine your audience before developing your main messages. Learn everything about them. Knowing your audience helps you choose the right words, information (thought leadership vs. technical), and motivational message.

2. Be Observant

Observe others' speeches to improve your own. Watching free TED Talks on education, business, science, technology, and creativity can teach you a lot about public speaking.

What worked and what didn't?

What would you change?

Their strengths

How interesting or dull was the topic?

Note their techniques to learn more. Studying the best public speakers will amaze you.

Learn how their stage presence helped them communicate and captivated their audience. Please note their pauses, humor, and pacing.

3. Practice

"A speaker should prepare based on what he wants to learn, not say." — Author, speaker, and pastor Tod Stocker

Practice makes perfect when it comes to public speaking. By repeating your presentation, you can find your comfort zone.

When you've practiced your presentation many times, you'll feel natural and confident giving it. Preparation helps overcome fear and anxiety. Review notes and important messages.

When you know the material well, you can explain it better. Your presentation preparation starts before you go on stage.

Keep a notebook or journal of ideas, quotes, and examples. More content means better audience-targeting.

4. Self-record

Videotape your speeches. Check yourself. Body language, hands, pacing, and vocabulary should be reviewed.

Best public speakers evaluate their performance to improve.

Write down what you did best, what you could improve and what you should stop doing after watching a recording of yourself. Seeing yourself can be unsettling. This is how you improve.

5. Remove text from slides

"Humans can't read and comprehend screen text while listening to a speaker. Therefore, lots of text and long, complete sentences are bad, bad, bad.” —Communications expert Garr Reynolds

Presentation slides shouldn't have too much text. 100-slide presentations bore the audience. Your slides should preview what you'll say to the audience.

Use slides to emphasize your main point visually.

If you add text, use at least 40-point font. Your slides shouldn't require squinting to read. You want people to watch you, not your slides.

6. Body language

"Body language is powerful." We had body language before speech, and 80% of a conversation is read through the body, not the words." — Dancer, writer, and broadcaster Deborah Bull

Nonverbal communication dominates. Our bodies speak louder than words. Don't fidget, rock, lean, or pace.

Relax your body to communicate clearly and without distraction through nonverbal cues. Public speaking anxiety can cause tense body language.

Maintain posture and eye contact. Don’t put your hand in your pockets, cross your arms, or stare at your notes. Make purposeful hand gestures that match what you're saying.

7. Beginning/ending Strong

Beginning and end are memorable. Your presentation must start strong and end strongly. To engage your audience, don't sound robotic.

Begin with a story, stat, or quote. Conclude with a summary of key points. Focus on how you will start and end your speech.

You should memorize your presentation's opening and closing. Memorize something naturally. Excellent presentations start and end strong because people won't remember the middle.

Bringing It All Together

Seven simple yet powerful ways to improve public speaking. Know your audience, study others, prepare and rehearse, record yourself, remove as much text as possible from slides, and start and end strong.

Follow these tips to improve your speaking and audience communication. Prepare, practice, and learn from great speakers to reduce your fear of public speaking.

"Speaking to one person or a thousand is public speaking." — Vocal coach Roger Love

1eth1da

3 years ago

6 Rules to build a successful NFT Community in 2022

Too much NFT, Discord, and shitposting.

How do you choose?

How do you recruit more members to join your NFT project?

In 2021, a successful NFT project required:

Monkey/ape artwork

Twitter and Discord bot-filled

Roadmap overpromise

Goal was quick cash.

2022 and the years after will change that.

These are 6 Rules for a Strong NFT Community in 2022:

THINK LONG TERM

This relates to roadmap planning. Hype and dumb luck may drive NFT projects (ahem, goblins) but rarely will your project soar.

Instead, consider sustainability.

Plan your roadmap based on your team's abilities.

Do what you're already doing, but with NFTs, make it bigger and better.

You shouldn't copy a project's roadmap just because it was profitable.

This will lead to over-promising, team burnout, and an RUG NFT project.

OFFER VALUE

Building a great community starts with giving.

Why are musicians popular?

Because they offer entertainment for everyone, a random person becomes a fan, and more fans become a cult.

That's how you should approach your community.

TEAM UP

A great team helps.

An NFT project could have 3 or 2 people.

Credibility trumps team size.

Make sure your team can answer community questions, resolve issues, and constantly attend to them.

Don't overwork and burn out.

Your community will be able to recognize that you are trying too hard and give up on the project.

BUILD A GREAT PRODUCT

Bored Ape Yacht Club altered the NFT space.

Cryptopunks transformed NFTs.

Many others did, including Okay Bears.

What made them that way?

Because they answered a key question.

What is my NFT supposed to be?

Before planning art, this question must be answered.

NFTs can't be just jpegs.

What does it represent?

Is it a Metaverse-ready project?

What blockchain are you going to be using and why?

Set some ground rules for yourself. This helps your project's direction.

These questions will help you and your team set a direction for blockchain, NFT, and Web3 technology.

EDUCATE ON WEB3

The more the team learns about Web3 technology, the more they can offer their community.

Think tokens, metaverse, cross-chain interoperability and more.

BUILD A GREAT COMMUNITY

Several projects mistreat their communities.

They treat their community like "customers" and try to sell them NFT.

Providing Whitelists and giveaways aren't your only community-building options.

Think bigger.

Consider them family and friends, not wallets.

Consider them fans.

These are some tips to start your NFT project.