More on NFTs & Art

Jayden Levitt

3 years ago

Starbucks' NFT Project recently defeated its rivals.

The same way Amazon killed bookstores. You just can’t see it yet.

Schultz globalized coffee. Before Starbucks, coffee sucked.

All accounts say 1970s coffee was awful.

Starbucks had three stores selling ground Indonesian coffee in the 1980s.

What a show!

A year after joining the company at 29, Schultz traveled to Italy for R&D.

He noticed the coffee shops' sense of theater and community and realized Starbucks was in the wrong business.

Integrating coffee and destination created a sense of community in the store.

Brilliant!

He told Starbucks' founders about his experience.

They disapproved.

For two years.

Like any good entrepreneur, Schultz left and opened his own Italian-style coffee shop chain.

Starbucks ran into financial trouble, so the founders offered to sell to Schultz.

Schultz bought Starbucks in 1987 for $3.8 million, including six stores and a payment plan.

Starbucks is worth $100.79 billion, per Google Finance.

That is roughly 26,500 times Schultz's initial investment.

Starbucks is releasing its own NFT platform under Schultz and his early vision.

This year, Starbucks Odyssey launches. The new digital experience combines a Loyalty Rewards program with NFT.

The platform, built on the Polygon sidechain, doesn't require a crypto wallet. Customers can earn and buy digital assets to unlock incentives and experiences.

They've removed all friction, making it more immersive and convenient than a coffee shop.

Brilliant!

NFTs are the access coupon to their digital community, but they don't highlight the technology.

They prioritize consumer experience by adding non-technical users to Web3. Their collectables are called journey stamps, not NFTs.

No mention of bundled gas fees.

Brady Brewer, Starbucks' CMO, said:

“It happens to be built on blockchain and web3 technologies, but the customer — to be honest — may very well not even know that what they’re doing is interacting with blockchain technology. It’s just the enabler,”

Rewards members will log into a web app using their loyalty program credentials to access Starbucks Odyssey. They won't know about blockchain transactions.

Starbucks has just dealt its rivals a devastating blow.

It generates more than ten times the revenue of its closest competitor Costa Coffee.

The coffee giant is booming.

Starbucks is ahead of its competitors. No wonder.

They have an innovative, adaptable leadership team.

Starbucks' DNA challenges the narrative, especially when others reject their ideas.

I’m off for a cappuccino.

Vishal Chawla

3 years ago

5 Bored Apes borrowed to claim $1.1 million in APE tokens

Takeaway

An unknown user took advantage of the ApeCoin airdrop to earn $1.1 million.

They used a flash loan to borrow five BAYC NFTs, claim the airdrop, and return the NFTs.

Yuga Labs, the creators of BAYC, airdropped ApeCoin (APE) to anyone who owns one of their NFTs yesterday.

For the Bored Ape Yacht Club and Mutant Ape Yacht Club collections, the team allocated 150 million tokens, or 15% of the total ApeCoin supply, worth over $800 million. Each BAYC holder received 10,094 tokens worth $80,000 to $200,000.

But someone managed to claim the airdrop using NFTs they didn't own. They used the airdrop's specific features to carry it out. And it worked, earning them $1.1 million in ApeCoin.

The trick was that the ApeCoin airdrop wasn't based on who owned which Bored Ape at a given time. Instead, anyone with a Bored Ape at the time of the airdrop could claim it. So if you gave someone your Bored Ape and you hadn't claimed your tokens, they could claim them.

The person only needed to get hold of some Bored Apes that hadn't had their tokens claimed to claim the airdrop. They could be returned immediately.
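The claim rule described above can be sketched as a toy model in Python. This is not the airdrop contract's actual code: the class and method names (`AirdropClaim`, `transfer`, `claim`) are hypothetical, and only the 10,094-token allocation per Bored Ape comes from the article.

```python
TOKENS_PER_APE = 10_094  # per-BAYC allocation quoted in the article


class AirdropClaim:
    """Toy model: eligibility follows the NFT, not a snapshot of past owners."""

    def __init__(self, owners):
        self.owners = dict(owners)   # ape id -> current holder
        self.claimed = set()         # ape ids whose tokens were already claimed
        self.balances = {}           # holder -> APE balance

    def transfer(self, ape_id, new_owner):
        self.owners[ape_id] = new_owner

    def claim(self, caller, ape_id):
        # Only ownership at the moment of the call is checked.
        if self.owners[ape_id] != caller:
            raise PermissionError("caller does not hold this ape right now")
        if ape_id in self.claimed:
            raise ValueError("tokens for this ape were already claimed")
        self.claimed.add(ape_id)
        self.balances[caller] = self.balances.get(caller, 0) + TOKENS_PER_APE


drop = AirdropClaim({7594: "vault"})
drop.transfer(7594, "borrower")   # temporary holder
drop.claim("borrower", 7594)      # succeeds: the holder at claim time gets the tokens
drop.transfer(7594, "vault")      # the ape goes back, the tokens stay with the borrower
```

In this model the claim flag is attached to the NFT, so whoever holds an unclaimed ape at the moment of the call receives its tokens, even if they hand the ape back straight away.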

So, what happened?

The person found a vault with five Bored Ape NFTs that hadn't been used to claim the airdrop.

A vault tokenizes an NFT or a group of NFTs. You put a bunch of NFTs in a vault and make a token. This token can then be staked for rewards or sold (representing part of the value of the collection of NFTs). Anyone with enough tokens can exchange them for NFTs.

This vault uses the NFTX protocol. In total, it contained five Bored Apes: #7594, #8214, #9915, #8167, and #4755. Nobody had claimed the airdrop because the NFTs were locked up in the vault and not controlled by anyone.

The person wanted to unlock the NFTs to claim the airdrop but didn't want to buy them outright, so they used a flash loan, a common tool for large DeFi hacks. Flash loans are a low-cost way to borrow large amounts of crypto that are repaid in the same transaction and block (meaning that the funds are never at risk of not being repaid).
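The "repaid in the same transaction" property can be illustrated with a small Python sketch. It is a simplified stand-in for how on-chain lending pools behave, not any real protocol's API: `LendingPool`, `flash_loan`, and `LoanNotRepaid` are hypothetical names, and a real revert would also undo everything the borrower did.

```python
class LoanNotRepaid(Exception):
    pass


class LendingPool:
    """Toy pool: a loan must be handed back before the call finishes."""

    def __init__(self, funds):
        self.funds = funds

    def flash_loan(self, amount, use_funds):
        if amount > self.funds:
            raise ValueError("pool does not hold that much")
        before = self.funds
        self.funds -= amount
        repaid = use_funds(amount)   # borrower does its work, returns what it repays
        self.funds += repaid
        if self.funds < before:
            self.funds = before      # mimic the revert: the pool is left untouched
            raise LoanNotRepaid("transaction reverted")
        return repaid - amount       # any surplus (a fee) stays with the pool


pool = LendingPool(funds=1_000)
fee = pool.flash_loan(300, lambda borrowed: borrowed)      # repaid in full

try:
    pool.flash_loan(300, lambda borrowed: borrowed - 1)    # one unit short
except LoanNotRepaid:
    print("reverted, pool unchanged")
```

Because the whole loan lives inside one call, the lender either ends up with at least what it started with or the operation is treated as if it never happened.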

With a flash loan of under $300,000 they bought a Bored Ape on NFT marketplace OpenSea. A large amount of the vault's token was then purchased, allowing them to redeem the five NFTs. The NFTs were used to claim the airdrop, before being returned, the tokens sold back, and the loan repaid.

During this process, they claimed 60,564 ApeCoin tokens. They then sold them on Uniswap for 399 ETH ($1.1 million). Then they returned the Bored Ape NFT used as collateral to the same NFTX vault.
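The figures above can be cross-checked with a few lines of Python. The inputs are the article's own numbers; the implied prices are rough back-of-the-envelope values derived from the rounded $1.1 million figure, not quoted market prices.

```python
TOKENS_PER_APE = 10_094   # per-BAYC allocation quoted earlier
vault_apes = 5            # redeemed from the NFTX vault
bought_apes = 1           # purchased on OpenSea with the flash loan

claimed = (vault_apes + bought_apes) * TOKENS_PER_APE
print(claimed)            # 60564, matching the reported total

proceeds_eth = 399
proceeds_usd = 1_100_000  # rounded figure from the article

implied_eth_price = proceeds_usd / proceeds_eth
implied_ape_price = proceeds_usd / claimed
print(round(implied_eth_price), round(implied_ape_price, 2))
```

The reported 60,564 tokens is exactly six allocations, which is consistent with the five vault apes plus the one bought on OpenSea.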

Attack or arbitrage?

Many social media commentators called the move arbitrage, but security firm BlockSecTeam disagreed. It said a flaw in the airdrop-claiming mechanism was exploited.

According to BlockSecTeam's analysis, the user took advantage of a "vulnerability" in the airdrop.

"We suspect a hack due to a flaw in the airdrop mechanism. The attacker exploited this vulnerability to profit from the airdrop claim," said BlockSecTeam.

For example, the airdrop could have taken into account how long a person owned the NFT before claiming the reward.

Because Yuga Labs didn't take a snapshot, anyone could buy the NFT in real time and claim it. This is probably why BAYC sales exploded so soon after the airdrop announcement.

Alex Carter

3 years ago

Metaverse, Web 3, and NFTs are BS

Most crypto is probably too.

The goals of Web 3 and the metaverse are admirable and attractive. Who doesn't want an internet owned by users? Who wouldn't want a digital realm where anything is possible? A better way to collaborate and visit pals.

Companies pursue profits endlessly. Infinite growth and revenue are expected, and if a corporation needs to sacrifice profits to safeguard users, the CEO, board of directors, and any executives will lose to the system of incentives that (1) retains workers with shares and (2) makes a company answerable to all of its shareholders. Only the government can guarantee user protections, but we know how successful that is. This is nothing new, just a problem with modern capitalism and tech platforms that a user-owned internet might remedy. Moxie, the founder of Signal, has a good articulation of some of these current Web 2 tech platform problems (but I forget the timestamp); thoughts on JRE aside, this episode is worth listening to (it’s about a bunch of other stuff too).

Moxie Marlinspike, founder of Signal, on the Joe Rogan Experience podcast.

Source: https://open.spotify.com/episode/2uVHiMqqJxy8iR2YB63aeP?si=4962b5ecb1854288

Web 3 champions are premature. There was so much spectacular growth during Web 2 that the next wave of founders want to make an even bigger impact, while investors old and new want a chance to get a piece of the moonshot action. Worse, crypto enthusiasts believe — and financially need — the fact of its success to be true, whether or not it is.

I’m doubtful that it will play out like current proponents say. Crypto has been the white-hot focus of SV’s best and brightest for a long time yet still struggles to come up with any mainstream use case other than ‘buy, HODL, and believe’: a store of value for your financial goals and wishes. Some kind of metaverse is likely, but will it be decentralized, mostly in VR, or will Meta (previously FB) play a big role? Unlikely.

METAVERSE

The metaverse exists already. Our digital lives span apps, platforms, and games. I can design a 3D house, invite people, use Discord, and hang around in an artificial environment. Millions of gamers do this in Rust, Minecraft, Valheim, and Animal Crossing, among other games. Discord's voice chat and Slack-like servers/channels are the present social anchor, but the interface, integrations, and data portability will improve. Soon you can stream YouTube videos on digital house walls. You can doodle, create art, play Jackbox, and walk through a door to play Apex Legends, Fortnite, etc. Not just gaming. Digital whiteboards and screen sharing enable real-time collaboration. They’ll review code and operate enterprises. Music is played and made. In digital living rooms, they'll watch movies, sports, comedy, and Twitch. They'll tweet, laugh, learn, and shittalk.

The metaverse is the evolution of our digital life at home, the third place. The closest analog would be Discord and the integration of Facebook, Slack, YouTube, etc. into a single, 3D, customizable hangout space.

I'm not certain this experience can be hugely decentralized and smoothly choreographed, managed, and run, or that VR — a luxury, cumbersome, and questionably relevant technology — must be part of it. Eventually, VR will be pragmatic, achievable, and superior to real life in many ways. A total sensory experience like the Matrix or Sword Art Online, where we're physically hooked into the Internet yet in our imaginations we're jumping, flying, and achieving athletic feats we never could in reality; exploring realms far grander than our own (as grand as it is). That VR is different from today's.

Ben Thompson released an episode of Exponent after Facebook changed its name to Meta. Ben was suspicious about many metaverse champion claims, but he made a good analogy between Oculus and the PC. The PC was initially far too pricey for the ordinary family to afford. It began as a business tool. It got so powerful and pervasive that it affected our personal life. Price continues to plummet and so much consumer software was produced that it's impossible to envision life without a home computer (or in our pockets). If Facebook shows product market fit with VR in business, through use cases like remote work and collaboration, maybe VR will become practical in our personal lives at home.

Before PCs, we relied on Blockbuster, the Yellow Pages, cabs to get to the airport, handwritten taxes, landline phones to schedule social events, and other archaic methods. It is impossible for me to conceive what VR, in the form of headsets and hand controllers, stands to give both professional and especially personal digital experiences that is an order of magnitude better than what we have today. Is looking around better than using a mouse to examine a 3D landscape? Do the hand controls make x10 or x100 work or gaming more fun or efficient? Will VR replace scalable Web 2 methods and applications like Web 1 and Web 2 did for analog? I don't know.

My guess is that the metaverse will arrive slowly, initially on displays we presently use, with more app interoperability. I doubt that it will be controlled by the people or by Facebook, a corporation that struggles to properly innovate internally, as practically every large digital company does. Large tech organizations are lousy at hiring product-savvy employees, and if they do, they rarely let them explore new things.

These companies act like business schools when they seek founders' results, with bureaucracy and dependency. Which company launched the last popular consumer software product that wasn't a clone or acquisition? Recent examples are scarce.

Web 3

Investors and entrepreneurs of Web 3 firms are declaring victory: 'Web 3 is here!' Web 3 is the future! Many profitable Web 2 enterprises existed when Web 2 was defined. The word was created to explain user behavior shifts, not a personal pipe dream.

Origins of Web 2: http://www.oreilly.com/pub/a/web2/archive/what-is-web-20.html

One of these Web 3 startups may provide the connecting tissue to link all these experiences or become one of the major new digital locations. Even so, successful players will likely use centralized power arrangements, as Web 2 businesses do now. Some Web 2 startups integrated our digital lives. Rockmelt (2010–2013) was a customizable browser with bespoke connectors to every program a user wanted; imagine seeing Facebook, Twitter, Discord, Netflix, YouTube, etc. all in one location. Failure. Who knows what Opera's doing?

Silicon Valley and tech Twitter in general have a history of jumping on dumb bandwagons that go nowhere. Dot-com crash in 2000? The huge deployment of capital into bad ideas and businesses is well-documented. And live video. It was the future until it became a niche sector for gamers. Live audio will play out a similar reality as CEOs with little comprehension of audio and no awareness of lasting new user behavior deceive each other into making more and bigger investments on fool's gold. Twitter trying to buy Clubhouse for $4B, Spotify buying Greenroom, Facebook exploring live audio and 'Tiktok for audio,' and now Amazon developing a live audio platform. This live audio frenzy won't be worth their time or energy. Blind guides blind. Instead of learning from prior failures like Twitter buying Periscope for $100M pre-launch and pre-product market fit, they're betting on unproven and uncompelling experiences.

NFTs

NFTs are also nonsense. Take Loot, a time-limited bag drop of "things" (text on the blockchain) for a game that didn't exist, bought by rich techies too busy to play video games and foolish enough to think they're getting in early on something with a big reward. What gaming studio is incentivized to use these items? Who's encouraged to join? No one cares besides Loot owners who don't have NFTs. Skill, merit, and effort should be rewarded with rare things for gamers. Even if a small minority of gamers can make a living playing, the average game's major appeal has never been to make actual money - that's a profession.

No game stays popular forever, so how is this objective sustainable? Once popularity and usage drop, exclusive crypto or NFTs will fall. And if NFTs are designed to have cross-game appeal, incentives apart, 30 years from now any new game will need millions of pre-existing objects to build around before they start. It doesn’t work.

Many games already feature item economies based on real in-game scarcity, generally for cosmetic things to avoid pay-to-win, which undermines scaled gaming incentives for huge player bases. Counter-Strike, Rust, etc. may be bought and sold on Steam with real money. Since the 1990s, unofficial cross-game marketplaces have sold in-game objects and currencies. NFTs aren't needed. Making a popular, enjoyable, durable game is already difficult.

With NFTs, certain JPEGs on the internet went from useless to selling for $69 million. Why? Crypto, Web 3, early Internet collectibles. NFTs are digital Beanie Babies (unlike NFTs, Beanie Babies were a popular children's toy; their destinies are the same). NFTs are worthless and scarce. They appeal to crypto enthusiasts seeking a practical use case to support their theory and boost their own fortune. They also appeal to SV insiders desperate not to miss the next big thing, not knowing what it will be. NFTs aren't about paying artists and creators who don't get credit for their work.

South Park's Underpants Gnomes

NFTs are a benign, foolish plan to earn money on par with South Park's underpants gnomes. At worst, they're the world of hucksterism and poor performers. Or those with money and enormous followings who, like everyone, don't completely grasp cryptocurrencies but are motivated by greed and status and believe Gary Vee's claim that CryptoPunks are the next Facebook. Gary's watertight logic: if NFT prices dip, they're on the same path as the most successful corporation in human history; buy the dip! NFTs aren't businesses or museum-worthy art. They're bs.

Gary Vee compares NFTs to Amazon.com. vm.tiktok.com/TTPdA9TyH2

We grew up collecting: Magic: The Gathering (MTG) cards printed in the 90s are now worth over $30,000. Imagine buying a digital Magic card with no underlying foundation. No one plays the game because it doesn't exist. An NFT is a contextless image someone conned you into buying a certificate for, but anyone may copy, paste, and use. Replace MTG with Pokemon for younger readers.

When Gary Vee strongarms 30 tech billionaires and YouTube influencers into buying CryptoPunks, they'll talk about it on Twitch, YouTube, podcasts, Twitter, etc. That will convince average folks that the product has value: "These guys are smart and/or rich, so I'll get in early like them." Crypto is similar. No solid, scaled, mainstream use case exists, and no one knows where it's headed, but since the global crypto financial bubble hasn't burst and many people have made insane fortunes, regular people are putting real money into something that is highly speculative and could be nothing because they want a piece of the action. Who doesn’t want free money? Rich techies and influencers won't be affected; normal folks will.

Imagine removing every $1 invested in Bitcoin instantly. What would happen? How far would Bitcoin fall? Over 90%, maybe even 95%, and Bitcoin would be dead. Bitcoin as an investment is the only scalable widespread use case: it's confidence that a better use case will arise and that being early pays handsomely. It's like pouring a trillion dollars into a company with no business strategy or users and a CEO who makes vague future references.

New tech and efforts may provoke a 'get off my lawn' mentality as you approach 40, but I've always prided myself on having a decent bullshit detector, and it's flying off the handle at this foolishness. If we can accomplish a functional, responsible, equitable, and ethical user-owned internet, I'm for it.

Postscript:

I wanted to summarize my opinions because I've been angry about this for a while but just sporadically tweeted about it. A friend handed me a Dan Olson YouTube video just before publication. He's more knowledgeable, articulate, and convincing about crypto. It's worth seeing:

This post is a summary. See the original one here.

You might also like

Sam Hickmann

3 years ago

What is this Fed interest rate everybody is talking about that makes or breaks the stock market?

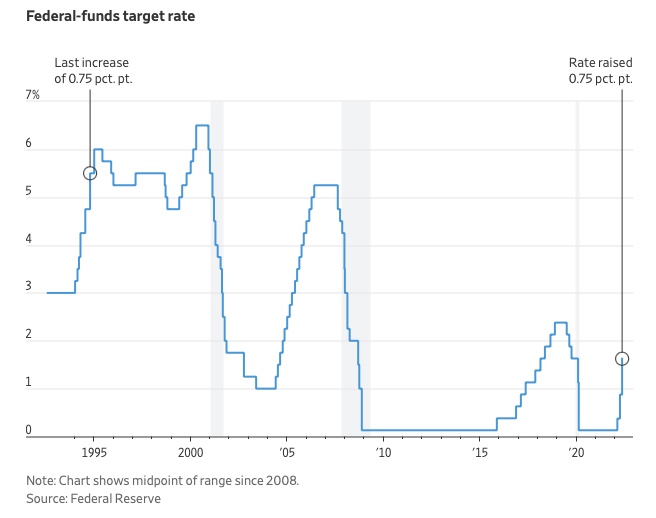

The Federal Funds Rate (FFR) is the target interest rate set by the Federal Reserve System (Fed)'s policy-making body (FOMC). This target is the rate at which the Fed suggests commercial banks borrow and lend their excess reserves overnight to each other.

The FOMC meets 8 times a year to set the target FFR. This is supposed to promote economic growth. The overnight lending market sets the actual rate based on commercial banks' short-term reserves. If the market strays too far, the Fed intervenes.

Banks must keep a certain percentage of their deposits in a Federal Reserve account. A bank's reserve requirement is a percentage of its total deposits. End-of-day bank account balances averaged over two-week reserve maintenance periods are used to determine reserve requirements.

If a bank expects to have end-of-day balances above what's needed, it can lend the excess to another institution.
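As a rough illustration of the reserve arithmetic described above, the Python sketch below averages end-of-day balances over a maintenance period and compares the result with the requirement. The function name, the 10% ratio, and all dollar figures are made-up assumptions for the example, not actual Fed parameters.

```python
from statistics import mean


def excess_reserves(end_of_day_balances, total_deposits, reserve_ratio):
    """Average balance over the maintenance period minus the required reserves."""
    required = total_deposits * reserve_ratio
    average_balance = mean(end_of_day_balances)
    return average_balance - required


# Ten business days in a two-week period, in $ millions (illustrative numbers).
balances = [120, 95, 110, 105, 130, 90, 100, 115, 125, 110]

surplus = excess_reserves(balances, total_deposits=1_000, reserve_ratio=0.10)
print(f"Lendable excess: ${surplus:.1f} million")
```

A positive result is the amount the bank could lend overnight to another institution; a negative one means it would need to borrow.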

The FOMC adjusts interest rates based on economic indicators that show inflation, recession, or other issues that affect economic growth. Core inflation and durable goods orders are indicators.

In response to economic conditions, the FFR target has changed over time. In the early 1980s, inflation pushed it to 20%. During the Great Recession of 2007-2009, the rate was slashed to 0.15 percent to encourage growth.

Inflation picked up in May 2022 despite earlier rate hikes, prompting today's 0.75 percentage point increase, the largest since 1994. The rate might rise to around 3.375% this year and 3.1% by the end of 2024.

Elnaz Sarraf

3 years ago

Why Bitcoin's Crash Could Be Good for Investors

The crypto market crashed in June 2022. Bitcoin and other cryptocurrencies hit their lowest prices in over a year, causing market panic. Some believe this crash will benefit future investors.

Before I discuss how this crash might help investors, let's examine why it happened. Inflation in the U.S. reached a 30-year high in 2022 after Russia invaded Ukraine. In response, the U.S. Federal Reserve raised interest rates by 0.5%, the most in almost 20 years. This hurts cryptocurrencies like Bitcoin. Higher interest rates make people less likely to invest in volatile assets like crypto, so many investors sold quickly.

The crypto market collapsed. Bitcoin, Ethereum, and Binance dropped 40%. Other cryptos crashed so hard they were delisted from almost every exchange. Bitcoin peaked in April 2022 at $41,000, but after the May interest rate hike, it crashed to $28,000. Bitcoin investors were worried. Even in bad times, this crash is unprecedented.

Bitcoin wasn't "doomed." Before the crash, LUNA was one of the top 5 cryptos by market cap. LUNA was trading around $80 at the start of May 2022, but after the rate hike?

Less than 1 cent. LUNA lost 99.99% of its value in days and was removed from every crypto exchange. Bitcoin's "crash" isn't as devastating when compared to LUNA.

Many people said Bitcoin is "due" for a LUNA-like crash and that the only reason it hasn't crashed is because it's bigger. That's false. If it were true, Bitcoin would be worth zero by now. It isn't. Instead, Bitcoin reached $28k, then $29k, $30k, and $31k before falling to $18k. That's not the world's greatest recovery, but it shows Bitcoin's safety.

Bitcoin isn't falling constantly. It fell because of the initial shock of interest rates, but not further. Now, Bitcoin's value is more likely to rise than fall. Bitcoin's low price also attracts investors. They know what prices Bitcoin can reach with enough hype, and they want to capitalize on low prices before it's too late.

Bitcoin's crash was bad, but in a way it wasn't. To understand, consider 2021. In March 2021, Bitcoin surpassed $60k for the first time. Elon Musk's announcement in May that he would no longer support Bitcoin caused a massive crash in the crypto market. In May 2021, Bitcoin's price hit $29,000. Elon Musk's statement doesn't carry more weight than the Fed raising rates, yet many expected this big announcement to kill Bitcoin.

Not so. Bitcoin crashed from $58k to $31k in 2021. Bitcoin fell from $41k to $28k in 2022. This crash is smaller. Bitcoin's price held up despite tensions and stress, proving investors still believe in it. What happened after the initial crash in the past?
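The size of the two crashes can be compared with a quick percentage calculation in Python. The prices are the rounded figures quoted above, so the results are approximate; `percent_drop` is just a helper name for this sketch.

```python
def percent_drop(high, low):
    """Fall from high to low, as a percentage of the high."""
    return (high - low) / high * 100


drop_2021 = percent_drop(58_000, 31_000)
drop_2022 = percent_drop(41_000, 28_000)

print(f"2021: {drop_2021:.1f}%  2022: {drop_2022:.1f}%")
# prints "2021: 46.6%  2022: 31.7%"
```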

Bitcoin fell until mid-July. This is also something we’re not seeing today. After a week, Bitcoin began to improve daily. Bitcoin's price rose after mid-July. Bitcoin's price fluctuated throughout the rest of 2021, but it topped $67k in November. Despite no major changes, the peak occurred after the crash. Elon Musk seemed uninterested in crypto and wasn't likely to change his mind soon. What triggered this peak? Nothing, really. What really happened is that people got over the initial statement. They forgot.

Internet users have goldfish-like attention spans. People quickly forgot the crash's cause and were back investing in crypto months later. Despite the market's setbacks, more crypto investors emerged by the end of 2021. Who gained from these peaks? Bitcoin investors who bought low. Bitcoin not only recovered but also doubled its ROI. It was like a movie, and it shows us what to expect from Bitcoin in the coming months.

The current Bitcoin crash isn't as bad as the last one. LUNA is causing market panic. LUNA and Bitcoin are different cryptocurrencies. LUNA crashed because Terra wasn’t able to keep its peg with the USD. Bitcoin is unanchored. It's one of the most decentralized investments available. LUNA's distrust affected crypto prices, including Bitcoin, but it won't last forever.

This is why Bitcoin will likely rebound in the coming months. In 2022, people will get over the rise in interest rates and the crash of LUNA, just as they did with Elon Musk's crypto stance in 2021. When the world moves on to the next big controversy, Bitcoin's price will soar.

Bitcoin may recover for another reason. Like controversy, interest rates fluctuate. The Russian invasion caused this inflation. World markets will stabilize, prices will fall, and interest rates will drop.

Next, lower interest rates could boost Bitcoin's price. Eventually, it will happen. The U.S. economy can't sustain such high interest rates. Investors will put every last dollar into Bitcoin if interest rates fall again.

Bitcoin has proven to be a stable investment. This boosts its investment reputation. Even if Ethereum dethrones Bitcoin as crypto king one day (or any other crypto, for that matter). Bitcoin may stay on top of the crypto ladder for a while. We'll have to wait a few months to see if any of this is true.

This post is a summary. Read the full article here.

Joseph Mavericks

3 years ago

You Don't Have to Spend $250 on TikTok Ads Because I Did

900K impressions, 8K clicks, and $$$ orders…

I recently started dropshipping. Now that I own my business and can charge it as a business expense, it feels less like money wasted if it doesn't work. I also made t-shirts to sell. I had intended to open a t-shirt store and had many designs on a hard drive. I read that Tiktok ads had a high conversion rate and low cost because they were new. According to many, the ads' cost/efficiency ratio would soon deteriorate and become as bad as Google or Facebook Ads. Now felt like the moment to try Tiktok marketing and dropshipping. I work in marketing for a SaaS firm and have seen how poorly ads perform. I wanted to try it on my own.

I set up $250 and ran advertising for a week. Before that, I made my own products, store, and marketing. In this post, I'll show you my process and results.

Setting up the store

Dropshipping is a sort of retail business in which the manufacturer ships the product directly to the client through an online platform maintained by a seller. The seller takes orders but has no stock. The manufacturer handles all orders. This no-stock concept increases profitability and flexibility.

In my situation, I used previous t-shirt designs to make my own product. I didn't want to handle order fulfillment logistics, so I looked for a way to print my designs on demand, ship them, and handle order tracking/returns automatically. So I found Printful.

I needed to connect my backend and supplier to a storefront so visitors could buy. 99% of dropshippers use Shopify, but I didn't want to master the difficult application. I wanted a one-day project. I'd previously worked with Big Cartel, so I chose them.

Big Cartel doesn't collect commissions on sales, just a flat monthly fee ($9.99 to $19.99 depending on your plan).

After opening a Big Cartel account, I uploaded 21 designs and product shots, then synced each product with Printful.

Developing the ads

I mocked up my designs on photos of cool-looking people from placeit.net, a great tool for creating product visuals when you don't have a studio, camera gear, or models to wear your t-shirts.

I opened an account on the website and had advertising visuals within 2 hours.

Because my designs are simple (black design on white t-shirt), I chose happy, stylish people on plain-colored backdrops. After that, I had to develop an animated slideshow.

Because I'm a graphic designer, I chose to use Adobe Premiere to create animated Tiktok advertising.

Premiere is a fancy video editing application used for more than advertisements. Premiere is used to edit movies, not social media marketing. I wanted this experiment to be quick, so I got 3 social media ad templates from motionarray.com and threw my visuals in. All the transitions and animations were pre-made in the files, so it only took a few hours to compile. The result:

I downloaded 3 different soundtracks for the videos to determine which would convert best.

After that, I opened a Tiktok business account, uploaded my videos, and entered the ad info. They went live within one hour.

The (poor) outcomes

As a European company, I couldn't deliver ads in the US. All of my ads' copy (title, description, and call to action) was in English, so they kept getting rejected in European countries where English isn't the main language. There are a lot of them:

I lost a lot of quality traffic, but I felt that if the images were engaging, people would check out the store and buy my t-shirts. I was wrong.

Day 1: 51,071 impressions, 411 clicks, 0 orders

Day 2: 114,053 impressions, 1,004 clicks, 0 orders

Day 3: 103,685 impressions, 987 clicks, 0 orders

Day 4: 101,437 impressions, 963 clicks, 0 orders

Day 5: 115,053 impressions, 1,050 clicks, 0 orders

Day 6: 125,799 impressions, 1,184 clicks, 0 orders

Day 7: 115,547 impressions, 1,050 clicks, 0 orders

Day 8: 121,456 impressions, 1,083 clicks, 0 orders

Day 9: 47,586 impressions, 419 clicks, 0 orders

My overall click-through rate (CTR) for the video ads was 0.9%. TikTok's paid ad formats all result in strong engagement rates (ads average 3% to 12% CTR to site), so a 1 to 2% CTR should have been doable.

My one-week experiment yielded 8,151 ad clicks but no sales. Even if only 0.1% of those clicks had converted, I should have made 8 sales. Even companies with horrible web marketing would get one download or trial sign-up for every 8,151 clicks. I knew that because my ads were in English, I had no impressions in the main EU markets (France, Spain, Italy, Germany), and that this hurt my conversion potential. I still couldn't believe my numbers.
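The totals quoted above can be reproduced from the daily figures with a short Python sketch. The numbers are the ones listed for days 1 to 9; the 0.1% conversion rate is the article's own hypothetical.

```python
# (impressions, clicks) for days 1-9 as listed above
daily = [
    (51_071, 411), (114_053, 1_004), (103_685, 987),
    (101_437, 963), (115_053, 1_050), (125_799, 1_184),
    (115_547, 1_050), (121_456, 1_083), (47_586, 419),
]

impressions = sum(i for i, _ in daily)
clicks = sum(c for _, c in daily)

ctr = clicks / impressions * 100   # click-through rate, in percent
expected_sales = clicks * 0.001    # if 0.1% of clicks had converted

print(f"{impressions:,} impressions, {clicks:,} clicks, CTR {ctr:.2f}%")
print(f"Expected sales at a 0.1% conversion rate: {expected_sales:.1f}")
```

The daily rows add up to 895,687 impressions and 8,151 clicks, which gives a click-through rate of roughly 0.9%.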

I dug into the statistics and found that Tiktok's stats didn't match my store traffic data.

Looking more closely at the numbers

My ads were approved on April 26 but didn't appear until April 27. My store dashboard showed 440 visitors but 1,004 clicks on Tiktok. This happens often while tracking campaign results since different platforms handle comparable user activities (click, view) differently. In online marketing, residual data won't always match across tools.

My data gap was too large. Even if half of the 1,004 people who clicked had closed their browser or left before the store site loaded, I would have gained 502 visitors. The significant difference between Tiktok clicks and Big Cartel store visits made me suspicious. It happened all week:

Day 1: 440 store visits, 1,004 ad clicks

Day 2: 482 store visits, 987 ad clicks

Day 3: 452 store visits, 963 ad clicks

Day 4: 443 store visits, 1,050 ad clicks

Day 5: 459 store visits, 1,184 ad clicks

Day 6: 430 store visits, 1,050 ad clicks

Day 7: 409 store visits, 1,031 ad clicks

Day 8: 166 store visits, 418 ad clicks

The disparity wasn't related to residual data or data processing. The disparity between visits and clicks looked regular, but I couldn't explain it.
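To see how regular the gap is, the share of reported clicks that showed up as store visits can be computed per day. This Python sketch uses only the figures listed above and does not explain the gap; it just quantifies it.

```python
# (store visits, ad clicks) for days 1-8 as listed above
days = [
    (440, 1_004), (482, 987), (452, 963), (443, 1_050),
    (459, 1_184), (430, 1_050), (409, 1_031), (166, 418),
]

ratios = [visits / clicks for visits, clicks in days]
for day, ratio in enumerate(ratios, start=1):
    print(f"Day {day}: {ratio:.0%} of reported clicks became store visits")

overall = sum(v for v, _ in days) / sum(c for _, c in days)
print(f"Overall: {overall:.1%}")
```

Every day lands between roughly 39% and 49%, so well under half of the clicks Tiktok reported ever reached the store.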

After the campaign concluded, I discovered all my creative assets (the videos) had a 0% CTR and a $0 expenditure in a separate dashboard. Whether it's a dashboard reporting issue or a budget allocation bug, online marketers shouldn't see this.

Tiktok can present any stats they want on their dashboard, just like any other platform that runs advertisements to promote content to its users. I can't verify that 895,687 individuals saw and clicked on my ad. I invested $200 for what appears to be around 900K impressions, which is an excellent ROI. No one bought a t-shirt, even an unattractive one, out of 900K people?

Would I do it again?

Nope. Whether I didn't make sales because Tiktok inflated the dashboard numbers or because I'm horrible at producing advertising and items that sell, I’ll stick to writing content and making videos. If setting up a business and ads in a few days was all it took to make money online, everyone would do it.

Video advertisements and dropshipping aren't dead. As long as the internet exists, people will click ads and buy stuff. Converting ads and selling stuff takes a lot of work, and I want to focus on other things.

I had always wanted to try dropshipping and I’m happy I did. I just won’t stick with it because it’s not something I’m interested in getting better at.

If I want to sell t-shirts again, I'll avoid Tiktok advertisements and find another route.